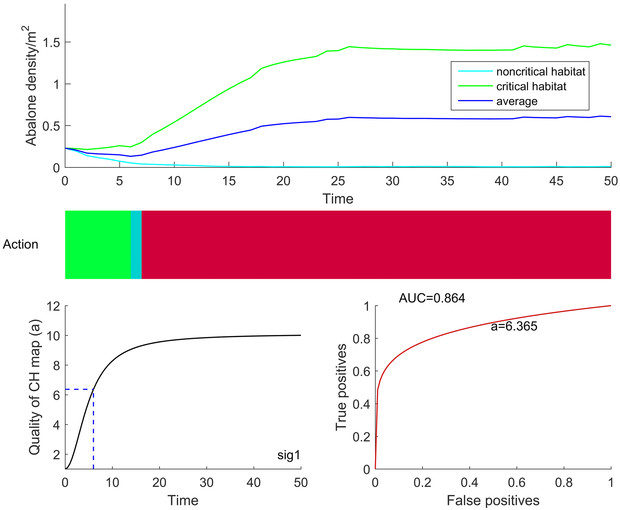

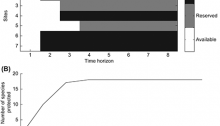

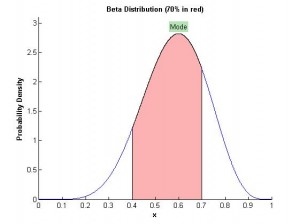

The latest addition to my research on how time influences our decision-making has just come out in Conservation Letters (Martin et al., 2016, free access). We demonstrate once again that time spent gathering more information to make better decisions is beneficial only up to a point. Leaving aside the massive modelling effort we had to go through (see lessons learned below), the conclusion of the paper sums up our main point:

It may be tempting to assume that more information is of value for its own sake, in a decision-making context information has value only when it leads to a change in actions taken, specifically, a change with enough benefit to species protection to outweigh the cost of obtaining the information. In the often contentious environment of endangered species decision making, parties who benefit from delay in taking action often lobby strategically for more information, not because they are concerned for the efficacy of protective actions but because their interests are best served by delaying protection as long as possible. In this environment, reminding everyone that more information does not always translate into more efficacious action may help strike a better balance between action and research. When it comes to species conservation, time is the resource that matters most. It is also the resource we cannot get more of.

Martin T.G., Camaclang A.E., Possingham H.P., Maguire L., Chadès I. (2016) Timing of critical habitat protection matters. Conservation Letters In Press, DOI: 10.1111/conl.12266 (OPEN ACCESS, PDF)

Lessons learned:

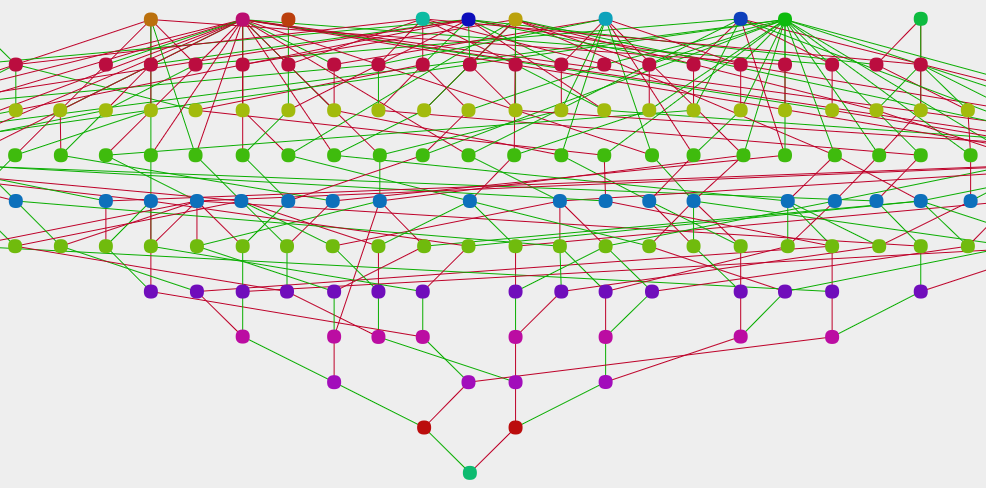

This paper was about 5 years in the making. Along the way I learnt a great deal about using AI reinforcement learning (RL) tools for this problem. Once more I had to give up on RL and opted for an exhaustive search to find the optimal stopping time, which was really disappointing considering the amount of time I had spent on it. As painful as it sounds, I was using the wrong approach. Off the top of my head, the hurdles were:

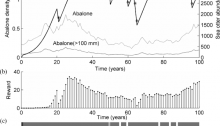

1) the matrix population model of the northern abalone exhibits a time lag, making the process non-Markovian;

2) the Q-Learning approach took far too long to find the optimal stopping time, considering the number of different configurations I had to go through;

3) the near-optimal strategies found by the Q-Learning approach were not consistent, because the algorithm had not converged;

4) it was way faster to perform an exhaustive search, and this should have been my first solution for a decision problem that was quite simple to solve.
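
To give a flavour of why exhaustive search is so cheap for a stopping-time problem, here is a minimal sketch in Python. It is not the model from the paper: the toy dynamics, the function names (`final_population`, `best_stopping_time`) and every parameter value are assumptions made up for illustration. The population declines while we keep learning, and the benefit of protection improves with the years spent learning, with diminishing returns.

```python
import math

def final_population(stop_year, horizon=50, n0=1000.0,
                     decline=0.95, growth_max=1.03, learn_rate=0.3):
    """Toy model (hypothetical): population at `horizon` if we stop
    learning and start protecting habitat at `stop_year`."""
    # While we keep gathering information, the unprotected population declines.
    n = n0 * decline ** stop_year
    # Diminishing returns on information: the growth rate under protection
    # rises from 1.0 towards growth_max as more years are spent learning.
    growth = 1.0 + (growth_max - 1.0) * (1.0 - math.exp(-learn_rate * stop_year))
    # The remaining years are spent under protection.
    return n * growth ** (horizon - stop_year)

def best_stopping_time(horizon=50, **params):
    """Exhaustive search: evaluate every candidate stopping year, keep the best."""
    outcomes = [final_population(t, horizon, **params) for t in range(horizon + 1)]
    best_year = max(range(horizon + 1), key=outcomes.__getitem__)
    return best_year, outcomes[best_year]

if __name__ == "__main__":
    year, population = best_stopping_time()
    print(f"Stop learning after year {year}; final population {population:.0f}")
```

With a single decision variable (the year in which to stop learning) there are only `horizon + 1` candidates to evaluate, so each configuration costs a handful of model runs, and there is no convergence to worry about. The search also does not care whether the underlying dynamics are Markovian, since it only needs the outcome of each full simulation.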

I am glad this paper is out in Conservation Letters for everyone to enjoy. Well done to all my co-authors for their support and hard work on this piece, especially Tara, for pushing it over the line.